The jobs are processed on a Greenplum parallel system running SAS High Performance Analytics Server 12.1. The system has 32 worker nodes. Each node has 24 threads and 256 GB of RAM. The data set has 67,164,440 (~67 million) rows. The event rate is 6.61%, or 4,437,160 events. The data set is larger than 80 GB. After the EDA steps, 59 variables are entered to fit all the models.
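To put those figures in perspective, here is a minimal back-of-the-envelope sketch in Python (it is not part of the SAS job). It only re-derives quantities from the numbers quoted above, and it assumes the 256 GB of RAM is per worker node and that rows are spread evenly across nodes and threads, which the real distribution need not honor.

```python
# Back-of-the-envelope check of the figures quoted in the text.
# Assumptions: 256 GB of RAM is per worker node; rows are spread evenly.
rows = 67_164_440
events = 4_437_160
nodes = 32
threads_per_node = 24
ram_per_node_gb = 256

event_rate = events / rows                 # share of rows that are events
total_threads = nodes * threads_per_node   # threads across the whole grid
total_ram_gb = nodes * ram_per_node_gb     # aggregate memory across the grid
rows_per_node = rows / nodes
rows_per_thread = rows / total_threads

print(f"event rate      : {event_rate:.2%}")
print(f"total threads   : {total_threads}")
print(f"total RAM (GB)  : {total_ram_gb}")
print(f"rows per node   : {rows_per_node:,.0f}")
print(f"rows per thread : {rows_per_thread:,.0f}")
```

Under these assumptions the aggregate memory is far larger than the 80+ GB data set, which is consistent with run times in seconds and minutes.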

One executive-summary-style observation: run times are measured in seconds and minutes.

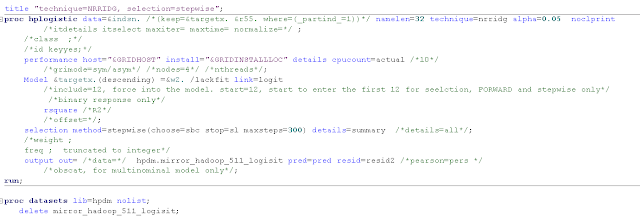

Below is sample code for fitting a binary logistic regression using HPLOGISTIC:

The following is the SAS log for the job running inside a SAS Enterprise Guide project flow:

While much of HPLOGISTIC's model output is similar to that of PROC LOGISTIC from SAS/STAT, some new output reflects a strong and renewed emphasis on computational efficiency in modeling. One good example is "Procedure Task Timing", which is now part of all SAS HP PROCs. Here is an example, with details, for three Newton-Raphson with ridging (NRRIDG) models.

Finally, a juxtaposition of several technique and selection combinations, with iterations, time spent, and AICC:
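As a reminder of what AICC measures, the sketch below uses the textbook small-sample correction, AICc = AIC + 2p(p+1)/(n-p-1), in Python. The parameter count and log-likelihood are hypothetical placeholders, not results from this exercise; only the row count comes from the text. The point is that at ~67 million rows the correction term is tiny, so AICC and AIC rank models almost identically.

```python
def aic(log_likelihood: float, p: int) -> float:
    """Akaike information criterion: -2 log L + 2p."""
    return -2.0 * log_likelihood + 2.0 * p

def aicc(log_likelihood: float, p: int, n: int) -> float:
    """Small-sample corrected AIC (textbook form); requires n > p + 1."""
    if n <= p + 1:
        raise ValueError("AICc is undefined unless n > p + 1")
    return aic(log_likelihood, p) + (2.0 * p * (p + 1)) / (n - p - 1)

n_rows = 67_164_440          # from the text
n_params = 60                # hypothetical: 59 effects plus an intercept
log_lik = -15_000_000.0      # hypothetical placeholder value

correction = aicc(log_lik, n_params, n_rows) - aic(log_lik, n_params)
print(f"AIC  = {aic(log_lik, n_params):,.4f}")
print(f"AICc = {aicc(log_lik, n_params, n_rows):,.4f}")
print(f"correction term = {correction:.6f}")
```

With so many rows, differences in AICC between fits are driven almost entirely by the log-likelihood and the number of parameters.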

Some observations from this 'coarse' exercise (more refined, in-depth work is planned for 2014):

1. The WHERE statement is supported. You can use PROC HPSAMPLE to insert a variable _partind_ into your data set, then use a WHERE statement to separate the training partition from the validation and test partitions. This way, you don't have to populate separate data sets, which now have a big footprint.

2. The RANUNI function is also supported. Unlike PROC HPREG, though, HPLOGISTIC does not yet support, as of today, the PARTITION statement, with which you can leverage an external data set for variable selection and feature validation. The popular C statistic will be available in the June 2014 release of SAS HPAS 13.1.

3. It is becoming more and more obvious that the era of Fisher scoring, in which most if not all modelers build one Exploratory Data Analysis (EDA) set to fit all techniques and selection methods, is passing. To max out the lift that a big data set can afford, one may need to build one set per technique. The crash of the build using TECHNIQUE=NMSIMP in this exercise exemplifies this. NMSIMP (Nelder-Mead simplex) is typically suitable for small problems, so it is recommended that one sample down the universe before using it. The technique best suited to large data sets is conjugate-gradient optimization, which is not shown in this blog (I should have one tested on a Hadoop cluster ready for blogging soon).

4. Apparently, more iterations do not necessarily mean better performance. This also seems to be the case with random forests and neural networks. In other words, there ought to be a saturation point for lift, for a given EDA on a given data set. One needs to reach that 'realization point' sooner rather than later to be more productive.

5. With data volumes such as 67 million rows, I doubt whether HPSUMMARY, HPCORR, and the rest of the usual summary exercises are sufficient to understand the raw input at the EDA stage. Multicollinearity is another intriguing subject. The trend seems to be that it is being overridden by observation-wise swap tests for best subsets of variables. Kernel estimation and full visual data analysis, such as that offered by SAS Visual Analytics, are two other strong options.

6. Logistic regression, especially when armed with facilities such as SAS HPLOGISTIC, opens the door to innovative modeling schemes. More and more modelers are moving beyond "one row per account" to model directly on transactions. In the context of transactions, 67 million rows is really not considered big at all. So be bold: if your performance still suffers today with HPLOGISTIC, what is holding you back is the hardware, not the software. (Well, if you insist on using SAS 9.1 of 2008, refuse to move on to HPLOGISTIC of 2013, and keep declaring 'SAS is slow', there is nothing anyone can do about it.)

7. Analytically, if you build directly on transactions instead of rolling up and summing up, you are purely modeling behavior. However, if your action requires account-level or individual-level scores, how to roll your transaction model scores up to that level is an interesting question.
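To make the roll-up question in the last observation concrete, here is a minimal Python sketch of three candidate aggregations of transaction-level scores to the account level: the mean, the maximum, and the probability of at least one event. The account IDs and scores are made up for illustration, the third option assumes transactions are independent (rarely true in practice), and none of these is presented as the recommended method.

```python
from collections import defaultdict
from math import prod

# Hypothetical transaction-level scores: (account_id, predicted event probability).
scored = [
    ("A", 0.10), ("A", 0.30), ("A", 0.05),
    ("B", 0.02), ("B", 0.04),
]

def roll_up(scored_transactions):
    """Aggregate transaction scores to one summary record per account."""
    by_account = defaultdict(list)
    for account_id, p in scored_transactions:
        by_account[account_id].append(p)

    summary = {}
    for account_id, probs in by_account.items():
        summary[account_id] = {
            "n_transactions": len(probs),
            "mean": sum(probs) / len(probs),
            "max": max(probs),
            # P(at least one event), ASSUMING independent transactions.
            "any_event": 1.0 - prod(1.0 - p for p in probs),
        }
    return summary

account_scores = roll_up(scored)
for account_id, s in sorted(account_scores.items()):
    print(account_id, s)
```

Which aggregation is appropriate depends on the action being taken: the maximum flags an account on its single riskiest transaction, while the mean dilutes it across the account's activity.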
